Preface

This post is an extended version of my previous post in which the following has been added: (1) a short introduction to Shannon's definition of information, (2) an in-detail explanation of the method used to obtain the results and (3) a concluding interpretation of observations.Overview

I want to share a discovery, I've made this year after a good deal of occupation with Shannon's Information Theory (see here for his constituting paper). It seems to be the case, that the latin alphabet (font-typeface) consisting of letters 'a' to 'z' when rasterized and averaged into one letter, shows patterns formed from areas with alternating highs and lows in information-content.To expose these patterns one has to examine the alphabet in a special way - i. e. each letter has to be rasterized into pixels and then taken into account to form an averaged value of information conveyed by each and every subpixel (-position) of which the letter is composed. The resulting map is called the Information-Heatmap of the Alphabet.

The prospect of this new method is, that it can be transferred to the field of Natural Language Processing (NLP), where it can serve as a mean to isolate the areas of high information density contained in natural spoken language. Or even find the important information within documents, when the document can be seen as member of an 'alphabet' made up of all documents.

Introduction

According to Shannon's definition, the Information given by every one character of a given set of characters (when all characters have equal probability of appearance) is defined via the number of yes/no-decisions (aka "bits") needed to get to the selected character when starting with the whole character-set. To divide the alphabet in an according way you best use a binary decision tree, whos depth (which stands for the number of yes/no-decisions) is calculated by the binary logarithm (= logarithm with base 2).Shannons original Formula uses the probability of appearance of each character, but when all characters have the same probability of appearance, the information can be calculated by using a much simpler method: the binary logarithm applied to the size of the character-set.

"Stumbling block": does information content differ on varying levels of abstraction?

When looking at an alphabet on letter level according to Shannon's Formula, the information contained in every letter is: log2(26) ≈ 4.7 bitBut when representing every letters by its e.g. 7x9 rasterized pixel-matrix, every letter should contain 7x9=63 pixels, so since every pixel has one bit of information - as we've learned in "CS 101"-course, altogether we get 63 bit per letter, right?!

In short - the answer is: not at all! - the information of every letter on the pixel-level is still 4.7 bit, i.e. it is the same as on letter-level! - Why is this so? - After all there hasn't been added any new information, we have just been switching the perspective...

So you may say: to represent the letters on pixel-level more space on your hard-disk ist used - 63 bit for every letter! - yes. that's true, but... - you could use a compression-algorithm, that would zip your letters into the original size.

Why is this so?! - this is due to Redundancy, that means that the surplus in information is 'unnecessary information' that isn't really needed to recognize the letter; i.e. you can complete the letter from knowing only some bits - all the other bits can be inferred, and hence do not count as new and hence additional information.

Remark: Redundancy as defined by Shannon equals to the ratio of the actual information per letter (4.7 bit) and the theoretically maximum possible information per letter. It shows that natural language has about 50% Redundancy.

Maybe the following thought-experiment makes things a little bit more explicit: every letter of your alphabet is formed by a special pattern of pixels; altogether there are 263 possible on/off-constellations of the 63 pixels. But not all possible combinations of pixels go into your alphabet as a "letter", because its overall size is limited to 26; this means, that when you look at only some of the pixels of any given letter of your alphabet, the remaining ("not looked at") pixels of the letters cannot show up arbitrarily, their pixel-value somehow is already determined! (or put it another way: the pixels of the letters are correlated)

Example: Letter 'A' - even without uncovering, we know, it's an 'A' (note that the reason, it cannot be a 'P' is, because a 'P' needs one pixel more in the upper left corner - compare with above font-listing)

So there are only 26 fixed constellations of pixels (aka "letters"). They can be identified in its entirety by only knowing a few pixels of them (it turns out: in median knowing 4.7 pixels... :)

And that sums up to the real information contained in one letter, which amounts to the same number, we already know from the alphabet-level-abstraction: 26 letters - every one gives log2(26)≈ 4.7... bit of Information.

Remark: since this definition of Information by Shannon is based on the ability to differentiate one letter from another, we here call this kind of Information the "differentiating" aspect of Information.

Arising Question

If the information stays the same on every level of abstraction, then how is the information from the upper (more abstract) level distributed among the pixels of the subordinate level?So, when every letter contains only 4.7 bit of information - even when viewed on the pixel-level - then: how are these 4.7 bit distributed over the 63 pixel(position)s???!

Are they evenly distributed over all pixels (so that every pixel gets the same 4.7/63≈0.075 bit?),

or are they distributed unequally (maybe somehow constrained by the shape of the letters...)?

Basic Approach: Mean-Pixel-Value

As a first approach, we could just average the pixel values for every of the 63 positions throughout all 26 letters (as can be seen in the figure on the left side).

This would produce some median value between 1 (black pixel) and 0 (white pixel) for every of the 63 pixel-positions. But consider the following case for one specific pixel-position: it is white for every letter, which means, that it stays white in the median; the same is true for a pixel-position that is black for every letter - it will stay black in the median. This is due to the definition of calculating the median-value.

But now let's reflect, what it means for a pixel-position to be black all the time, i.e. for every letter this specific position stays black: this means nothing less than, the particular pixel conveys no information at all! The same is true for an all time white position.

Both yield zero information, because that's the definition of information: news-value - both positions hold zero "news-value", because their values are determined, and therefore expectable.

Remark: their appearance-probability is 100%, and that's one reason Shannon introduced the logarithm in his formula - a letter with probability 1 yields log2(1)=0, i.e. zero information-value.

So just averaging over pixel-values gives mean pixel-value, not mean information-value! What can we do to calculate the real median information contained in each pixel position of the letters?

Example: The following (in part covered) letter can be a 'P' or an 'R'. With uncovering of one (specific) pixel, we get enough information to judge it's an 'R'.

So this pixel-position gives us an information-value increase of 1 bit, because the number of possible letters has been shrunken from 2 possibilities to 1 possible letter - and therefore the information-yield is log2(2/1) = 1 bit.

This would produce some median value between 1 (black pixel) and 0 (white pixel) for every of the 63 pixel-positions. But consider the following case for one specific pixel-position: it is white for every letter, which means, that it stays white in the median; the same is true for a pixel-position that is black for every letter - it will stay black in the median. This is due to the definition of calculating the median-value.

But now let's reflect, what it means for a pixel-position to be black all the time, i.e. for every letter this specific position stays black: this means nothing less than, the particular pixel conveys no information at all! The same is true for an all time white position.

Both yield zero information, because that's the definition of information: news-value - both positions hold zero "news-value", because their values are determined, and therefore expectable.

Remark: their appearance-probability is 100%, and that's one reason Shannon introduced the logarithm in his formula - a letter with probability 1 yields log2(1)=0, i.e. zero information-value.

So just averaging over pixel-values gives mean pixel-value, not mean information-value! What can we do to calculate the real median information contained in each pixel position of the letters?

A good point to start is the above observation, that, when just uncovering parts of an otherwise covered up letter, the likelihood, which letter it is about, grows with every more pixel uncovered!

So the algorithm for calculating the pixel-based mean-information uncovers pixel by pixel, while in parallel analyzing on the letter-level, just how likely this constellation makes this and that letter (i.e. the letters that remain possible, when completely uncovering the current pixel-constellation).Example: The following (in part covered) letter can be a 'P' or an 'R'. With uncovering of one (specific) pixel, we get enough information to judge it's an 'R'.

So this pixel-position gives us an information-value increase of 1 bit, because the number of possible letters has been shrunken from 2 possibilities to 1 possible letter - and therefore the information-yield is log2(2/1) = 1 bit.

We now want to generalize this principle into a method, that can calculate the "true" information-value-contribution conveyed by every single pixel-position. Therefore, we need to lay a path through all sub-pixels of the letter. The most obvious path is to uncover pixel by pixel beginning top-left line-by-line, ending bottom-right.

But, before we can do this, we need to make a short excurse into the specific method, Shannon used to calculate Information.

The Shannon-Parabola: Mean-Information-Value

This is a short Excursus into Shannon's Original-Paper 'A Mathematical Theory of Communication', from which the figure on the right side is taken:

It shows how an 'Alphabet' consisting of two 'letters'. These two letters give varying (median) information-content, depending on their appearance-probabilities.

When both letters are equal probable (i.e. 50/50 percent) then, there's a maximum of possible information "transported" within each letter. Whenever one letter appears more frequent than the other, then the median information is declining - this is due to the above formula, which states in the two-letter-case:

p*log2(p)+(1-p)*log2(1-p)

resulting in a parabola. As an explanation consider, that the more often you receive an information (letter) the more its "information-value" declines, while in contrary the more rare an information (aka letter) is, the more "value" it has for you.

And in this special case of two letters, inclining the information content of one letter (by declining its probability) at the expense of the other, doesn't pay out in terms of information conveyed by both letters in median. The median declines when deviating from tie, similarly to the mathematical fact, that a rectangular with given perimeter gives maximum area, when it's a square, i.e. both sides are equal in length.

Now let's transfer this insight to our scenario. We already have an alphabet with exactly two 'letters': white and black pixel! So consider a pixel-position, that is white in 50% of all letters, and black in the other 13 cases, then we have a maximum information for that pixel, which sums up to 1 bit!

But consider the case, that on a specific pixel-position, e.g. only in a quarter of all letters have a black colored pixel, and a white colored in all other cases, then according to Shannon's above 'Parabola-Formula' this specific pixel-position accounts for 0.81 bit roughly in the median.

Remark: It's important to understand, that not only black/'turned-on' pixels (value='1') deliver information (which some people tend to assume intuitively at first), but that also white/'turned off' pixels (value='0') contribute to information-gain in the same manner.

x

So, what we need is a computational method that doesn't disregard the correlation between sub-pixels of any given letter. Since this correlation is mediated on the letter-level, not on the pixel-level, the letter level has to be somehow incorporated into this algorithm.

Path-Uncovering-Algorithm: Correlated Information-Value

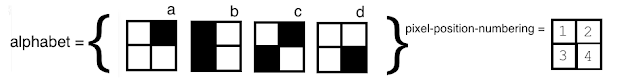

To not complicate the underlying idea, we introduce a small example alphabet made up from four letters: a, b, c, d. Note that the pixel positions are numbered from 1 to 4 for ease of referencing of the pixels during the process of inspecting them.

So, e.g., when we start with pixel-position №1 and uncover a '0' then letter 'b' becomes impossible therefore leaving only letters 'a','c','d' among possible outcomes. These possible outcomes are plotted in red in the figure below.

Since three letters have a '0' on position №1, the probability for uncovering a '0' on this specific position is 3/4. Accordingly the probability for uncovering a '1' is 1/4. Those probabilities are plotted in black.

Now let's have a look at the question how much information we have gained by uncovering that one pixel.

The approach is, to look how much information this one pixel delivers on alphabet-level, because that is our 'intended' level of abstraction. So the amount of information says how much more distinctness this one uncovered pixel delivers, about what letter it is about.

Therefore we use Shannons formula from above: a letter gives us an information-value of the binary logarithm applied to the reciprocal value of its appearance-probability.

So our probability for uncovering on pixel-position №1 value '1' ist 3/4 and for uncovering '0' is 1/4 and hence our information-gain for "1" rpt. "0" are log2(4/3)≈ 0.42 rpt. log2(4/1) = 2.0 ! (see tree below). These values are plotted in blue for every branch of the tree.

Now we repeat this procedure for the next pixel to be uncovered (in this case position №2). Note that from now on, all probabilities along the path are conditional probabilities reflecting the context of already uncovered pixels.

So after having gone through all pixel-positions sequentially, and adding up their information-gain-values should result in the same amount of information - 2 bit - for every path. That's btw. the information-content of every letter, when calculated on the alphabet-level ( log2('size of alphabet')). - Convince yourself by adding the values along the four paths (their sums are plotted at the end of each path in blue).

Averaging over all Paths

As it turns out, considering just one uncovering path, results in a very biassed view, because the outcome is extremely dependent on the chosen path. Suppose you start your uncovering-path with pixel-position №2 - it will give you 1 bit of information (because of black/white - 50/50, you know...). In contrast, when pixel-position №2 is uncovered as second pixel in a sequence, as can be seen in the tree above, it will give you only (1.58+0.58+0.58+0)/4 ≈ 0.685 bit - remember there are 4 paths, leading to 4 leafs, that's why there's a divisor 4). This implies, that the information-value given by a single pixel-position depends to a great extend on the uncover point in time: the earlier it is uncovered, the more information it accounts for, generally...

So, as a consequence we should consider as many uncover-paths (aka permutations of sub-pixels) as possible and average the obtained per-pixel-values afterwards...

Since we have not the computational power to compute all possible paths - which has factorial complexity -, we use a random subset of all possible permutations, and trust that it will serve as a good enough approximation for all permutations (that's the reason why there's a function called NthPerm()in the code below, which helps skipping all unregarded permutations).

Maybe one question comes to your mind: Why should it make sense to look at arbitrarily shaped uncover-paths? Isn't it more natural to uncover adjacent pixels from left to right, like when reading?

Answer: Yes, we should examine this in one of the next posts, but for now consider the following scenario: you're 'zooming-in' from far away - where you can see only coarse blurred letters on a document. Slowly the letters become readable; sub-pixel by sub-pixel becomes distinguishable, while resolution increases. In this scenario there is no special sequence or order by which the pixels become visible. The succession is random! So using as many as possible permutation-paths and afterwards averaging over them, seems like a good idea!

Since we have not the computational power to compute all possible paths - which has factorial complexity -, we use a random subset of all possible permutations, and trust that it will serve as a good enough approximation for all permutations (that's the reason why there's a function called NthPerm()in the code below, which helps skipping all unregarded permutations).

Maybe one question comes to your mind: Why should it make sense to look at arbitrarily shaped uncover-paths? Isn't it more natural to uncover adjacent pixels from left to right, like when reading?

Answer: Yes, we should examine this in one of the next posts, but for now consider the following scenario: you're 'zooming-in' from far away - where you can see only coarse blurred letters on a document. Slowly the letters become readable; sub-pixel by sub-pixel becomes distinguishable, while resolution increases. In this scenario there is no special sequence or order by which the pixels become visible. The succession is random! So using as many as possible permutation-paths and afterwards averaging over them, seems like a good idea!

Result

If for a rastered alphabet of letters the information of every subpixel is measured and mapped, then a pattern built of peaks with high information, and plains with low information is produced.

The more cycles (aka paths) we run, the clearer the structure made up from peaks and plains becomes.

It looks like the 'plains' are arranged in some kind of raster - more than the 'peaks' are so;

This means we have a raster-structure formed by the areas of Redundancy(=low information) - we call it redundancy-structure.

Crosscheck

To rule out, that all of these patterns are a byproduct of the algorithm itself - some kind of digital 'moirée'-effect - we use some other 'fonts' for cross-checking. One alphabet contains symbols, like the infamous 'Dingbats'-Font, while a second one just contains random pixels, albeit with a share of black pixels limited to 17% - about the same frequency, as found in the VeraMono-Font.

While the Symbol-Font yields a plateau with nearly no structure, the Random-Font delivers a maybe fine-grained version of the VeraMono-case - but it can also be, that with a higher number of cycles it will level out to a plateau like in the Entypo-case. To clarify this we need to run the simulation for more cycles on a more powerful machine.

Implications and Interpretation

The hypothesis is, that alphabets formed this 'raster'-way work best for the human cognitive apparatus: Any 'good readable' font-alphabet - at least one that is 'good readable' for humans - should contain in its Information-Heatmap an evenly-distributed count of information-peaks and they should be 'in balance' with their corresponding redundancy-plains, which should form a raster-structure.

The well distributed alternating areas serve two complementary purpose: the areas with high information-concentration are used for actual information-transmission, while the low-level-information-areas serve as a kind of positioning-system, stabilizing the 'scanning'-frame (like e.g. the squares found in QR-Codes).

Note: It's also interesting, that the Heatmap resembles a 7-segment-display. That maybe the reason why 7-segment-displays can be used to depict letters from an alphabet as well as numbers.

You could go further and postulate two kinds of information: distinctive information (the original information by shannon) and positional information (the original redundancy by shannon).

Distinctive information helps differentiating, while positional information has a stabilizing effect while reading.

The latter is the necessary foundation for the former thus enabling the transfer of information.

The positional information shall be distributed spaciously and evenly over the area of the letter, such that an observer always finds a good origin to start with, regardless of where (s)he looks at.

Remark: the Heatmap always exhibits a structure that somehow reflects, how an alphabet is constructed. Since the latin alphabet has been designed with redundancies in mind (as can be seen in the following figure) it's no wonder that the heatmap shows a pattern built from alternating areas: information peaks and redundancy plains.

You could go further and postulate two kinds of information: distinctive information (the original information by shannon) and positional information (the original redundancy by shannon).

Distinctive information helps differentiating, while positional information has a stabilizing effect while reading.

The latter is the necessary foundation for the former thus enabling the transfer of information.

The positional information shall be distributed spaciously and evenly over the area of the letter, such that an observer always finds a good origin to start with, regardless of where (s)he looks at.

Distinctive information helps differentiating, while positional information has a stabilizing effect while reading.

The latter is the necessary foundation for the former thus enabling the transfer of information.

The positional information shall be distributed spaciously and evenly over the area of the letter, such that an observer always finds a good origin to start with, regardless of where (s)he looks at.

Agenda

- get machine with more RAM, to speed up computations and allow for greater depth-resolution of heatmap (= more calculated paths/cycles)

- examine other font-typefaces (e.g. chinese), additionally use bigger raster-resolution

- find some kind of (positional) 'normalization' to use with non-monospaced fonts

- formulate equation for equilibrium of redundancy- and information-structure in heatmap (rpt. the stabilizing vs. differentiating aspects of information)

- prove convergence of Heatmap-Algorithm

- use algorithm to build information-lens that can focus on letter-boundaries in otherwise unstructured document (this is done by varying the boundaries until the heatmap shows a sharp pattern of information maxima and minima) - this is equivalent to finding the 'intended' abstraction-level within a document

- build some kind of 'Huffmann-Weight-Matrix' that gives higher weights to sub-pixels with higher information-value

- use in conjunction with word2vec, to determine building-blocks within natural language to extract hierarchical knowledge-representations

- form a team to participate in ai x prize (wild card)

Links

[1] http://worrydream.com/refs/Shannon%20-%20A%20Mathematical%20Theory%20of%20Communication.pdf[2] https://github.com/rougier/freetype-py

[3] https://de.wikipedia.org/wiki/Welle (Welle, Phrood)

[4] http://luc.devroye.org/IakovChernikhov--ConstructedAlphabets.jpg (constructed alphabet)

Thanks

to Roman P. for reading drafts of this

Installation

(1) download and install Anaconda (Python + Jupyter Notebook) here

(2) download and unzip and install latest Freetype lib here[use a command-line terminal]

$ cd ./Downloads/freetype-2.8.1/

$ ./configure

$ make

$ make install (or sudo make install)

$ pip install freetype-py(5) start up jupyter notebook

$ jupyter notebookCode

import matplotlib.pyplot as plt

from math import log, factorial

import numpy as np

import itertools

import sys

import time

import random

%matplotlib inline

def sigirinc(a, chk, depth, p, per, d, z):

b = []

for x in a:

if d[x][per[depth]] == chk:

b.append(x)

if len(b) > 0:

p[per[depth]]=log(len(a)*1./len(b),2)

if depth == len(d[0])-1:

assert (len(b)==1),"more than one element at end of path!!!"

z[b[0]] = p

else:

c = list(b)

q = list(p)

sigirinc(b, 0, depth+1, p, per, d, z)

sigirinc(c, 1, depth+1, q, per, d, z)

def checkalph(d, anz):

s = range(len(d))

zi = []

counter = 0

gesamt = factorial(len(d[0]))

if(factorial(len(d[0]))<anz):

anz = factorial(len(d[0]))

step = int(factorial(len(d[0]))//anz)

for x in xrange(anz):

i = random.randint(0,gesamt-1)

permi = []

nthPerm(i, list(range(len(d[0]))), permi)

p=list(range(len(d[0])))

q=list(range(len(d[0])))

z = list(range(len(s)))

sigirinc(s, 0, 0, p, permi, d, z)

sigirinc(s, 1, 0, q, permi, d, z)

zi.append(z)

counter += 1

if counter%(anz/100)==0:

sys.stdout.write("%s" % '.')

sys.stdout.flush()

return zi

def printheatmap(a,zzz,x,y):

lll = []

for zz in zzz:

zz_ = list(itertools.chain.from_iterable(zz))

i = np.mean(zz_, axis=0)

lll.append(i)

print(np.array(lll).reshape(x,y))

plt.imshow(np.array(lll).reshape(x,y), cmap=plt.cm.binary, interpolation='none')

plt.colorbar()

plt.show()

def nthPerm(n,elems,z):

if(len(elems) == 1):

z.append(elems[0])

else:

sizeGroup = factorial(len(elems)-1)

q,r = divmod(n,sizeGroup)

v = elems[q]

elems.remove(v)

z.append(v)

nthPerm(r,elems,z)

def printAlphabet(z, h, w):

Z = np.array(z)

fig = plt.figure(figsize=(20, 20*Z.shape[0]/float(Z.shape[1])))

for a in range(len(z)):

ax = fig.add_subplot(1,len(Z),a+1)

c=np.pad(Z[a].reshape(h,w), ((0,1),(0,1)), mode='constant')

ax.imshow(c, interpolation='none', cmap=plt.cm.gray_r)

plt.xticks([]), plt.yticks([])

plt.show()

from freetype import *

def bits(x):

data = []

for i in range(8):

data.insert(0, int((x & 1) == 1))

x = x >> 1

return data

def loadRasterFont(f, size, ZZ):

face = Face(f)

face.set_char_size( size*64)

letters = 'abcdefghijklmnopqrstuvwxyz'

upper, lower, prae, post = 0, 0, 0, 0

### first pass

for each in letters:

face.load_char(each, FT_LOAD_RENDER | FT_LOAD_TARGET_MONO )

bitmap = face.glyph.bitmap

upper = max(upper, face.glyph.bitmap_top)

lower = max(lower, bitmap.rows-face.glyph.bitmap_top)

prae = max(prae, face.glyph.bitmap_left)

post = max(post, bitmap.width-face.glyph.bitmap_left)

he = upper+lower

wi = prae+post

print (he, wi)

### second pass

for each in letters:

face.load_char(each, FT_LOAD_RENDER | FT_LOAD_TARGET_MONO )

bitmap = face.glyph.bitmap

data = []

for i in range(bitmap.rows):

row = []

for j in range(bitmap.pitch):

row.extend(bits(bitmap.buffer[i*bitmap.pitch+j]))

data.extend(row[:bitmap.width])

Z = np.zeros((he,wi), dtype=np.ubyte)

w,h = bitmap.width, bitmap.rows

x,y = prae-face.glyph.bitmap_left, upper-face.glyph.bitmap_top

Z[y:y+h,x:x+w] += np.array(data, dtype='ubyte').reshape(h,w)

ZZ.append(Z.reshape(he*wi).tolist())

plt.figure(figsize=(5,5))

plt.imshow(Z, interpolation='none', cmap=plt.cm.binary)

plt.xticks([]), plt.yticks([])

plt.show()

return wi, he

ZZ=[]

try:

xrange

except NameError:

xrange = range

cycles = 10000

width, height = loadRasterFont('./VeraMono.ttf', 36, ZZ)

printAlphabet(ZZ, height, width)

t = time.time()

zz = checkalph(ZZ, cycles)

zzz = []

zzz.append(zz)

printheatmap(ZZ,zzz,height, width)

print(time.time()-t)