Introduction

In our previous post it was shown how to calculate the "Shannon Information" given by one single pixel of an arbitrary alphabet-letter. In this post we're going to examine how every pixel additionally delivers extra information about its position within the letter - its "Positional Information".

Result

In the following the resulting heatmap is shown. For every pixel its value shows how much information the uncovering of this specific pixel contributes to the increasing knowledge about the absolut positioning of the uncovered letter during the uncovering process. Note that the values are averaged over a big number of uncovering-paths.

Algorithm

The Algorithm ist based on the one from the previous post. That means that a random path per[] (provided by a permutation of all pixel-positions) through all pixels of the letter is chosen. We act on the assumption, that the letter which will be uncovered is known, and we are only interested in its absolute positioning - which we don't know at the beginning.

The Differences of both Algorithms are shown in the following code comparison:

① only one letter (bux) ist examined at a time, - for every possible frame-offset-position x of the list a of possible frame-offsets, it is checked, whether the relative (to the frame-start-position) pixel-position at per[depth] does match the uncovered pixel-value chk. If so, it's appended to a new list b.

② This new list b will then be used to calculate the information given by uncovering a pixel of value chk at position per[depth] (calculation is done via logarithm dualis of it's inverse probability).

③ Before adding the chain of pixel-probabilities along the uncover-path into the result-collector-list z[], they have to be rotated for b[0]-per[0] positions, because that was the difference between the tentative pixel-position of the first pixel uncovered at the start and the resulting real position in the end. So with this shift it's made sure, that every contribution to the positional information belongs to the right absolute pixel-position.

Note that even if every path begins with multiple possible different pixel-positions, in the end every pixel will be rotated to its correct position, and every pixel contributes the full uncover-information, regardless of how many pixels are involved - the information will not be partitioned among them.

Sample

Consider the following - very rudimentary - letter "A" made up from 3x4 pixels:

We enumerate its Positions from 0 to 11 line-by-line, starting top-left.

In the beginning the letter is completely covered with a (green :) opaque sheet, and we know nothing about the underlying letters absolute positioning.

We choose a random path through all pixels of the uncovered letter and uncover them one by one. As shown in white, for the first three steps 5 - 6 - 0 - ..., in the following picture:

Because we know nothing about the positioning of our underlying letter and therefore must make assumptions about its absolute positioning (shown in blue), possibly we think, that our our first pixel uncovered maybe pixel № 4,

(red circle) but in "reality" it's pixel № 9,

like shown in the following figure:

By the way: in our model the pixel-matrix is continued in all four directions, so that there's no abrupt border or end of letter - this is reflected in the code by using the modulo-operator (line- and row-wise).

In the following sample tree, uncovering-probabilities for the first step are calculated:

In the end we will have cumulated the Information that gives us the exact positioning of the first uncovered pixel; and since there are 12 possible absolute positions, the cumulated information gathered in the end will be ld(12) ≈ 3.58 bit.

~

Lets reconsider, what it means, if we uncover the first pixel along the uncovering-path, and its a white one: it means that the underlying letter "A" can be positioned in "any" position under this pixel, but it has to be guaranteed, that a white pixel appears exactly under the uncovered pixel;

But which of the many "white" pixels of the letter "A" is it? - we don't know, but we know the number of white pixels within "A", and therefore the probability. This probability will be converted into an information-value according to Shannon's Formula (and the one from Laplace also ;) :

So now when uncovering the next (second) pixel, its not hard to see that now the probabilities for white rpt. black pixel will be calculated not only by the count of still uncovered white rpt. black pixels but those of them who are at the right position relative to the first uncovered pixel-value.

Now that delivers the following tree, when continuing until step 3 in pixel-uncovering-path (for letter "A"): Position 5 - Position 6 - Position 0 - ...

Application

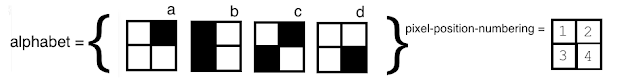

To test whether it's a meaningful measure, we look at a two-letter-alphabet consisting of letters similar to the markers of "QR-Codes" (TM Denso Wave)

When looking at the shannon heatmap, it's obvious, that the only pixel delivering information is the one in the center, because it's the only difference between both letters.

The positional heatmap shows, that the white "donut"-shaped ring contains the most positional information - this means, that these pixels help most in identifying the absolut position of the pixels uncovered.

It's somehow striking, that the positional information and the shannon information are mostly seperated. (i.e. the "donut" and the middle pixel have little overlap).

~

Conclusion

If you compare that artificial qr-code-alphabet with a somehow more "natural" alphabet, like the normal latin alphabet, you can see, that there's less separation, and more overlap.

When comparing per-pixel value heatmaps of Shannon Information from the previous post with Positional Information from this blog-post, at the first look it seems that both types of information somehow complement each other.

A possible interpretation is, that for humans a "normal" alphabet is more easily readable, because both types of information are in the same place, i.e. more "findable".

Or put it more abstract: In a visual setting, to effectively transmit information, it takes some redundancy. This redundant pixels are used as a kind of "marker" to obtain the exact position of the information-bearing pixels.

Note that this redundancy is not identical with the redundancy needed to transmit information in a "noise channel".

Insight

There's a relationship between Shannon- and Positional-Information.

The similarity of the letters provides only the basis upon which their difference can be recognized. Without the positional information as to where the pixel is located, from which I get disparity information in the form of a white or black pixel, I can not get such disparate information either.

Or put it in other words - as my former instructor at University once wrote in his Blog:

"The observer (creator) of the data is interested in melodies that are unfamiliar enough to contain somewhat unexpected harmonies or beats etc., but familiar enough to allow for quickly recognizing the presence of a new learnable regularity or compressibility in the sound stream: a novel pattern! " (s. http://people.idsia.ch/~juergen/creativity.html)

This addresses the balance between positional and differential information - the former is the familiar Shannon-Information, while the latter is the Positional Information, calculated above.

Remarks

Note that in our main-example the median information per pixel of both heatmaps is in the same magnitude, because the number of alphabet-letters doesn't differ much from the number of pixel-positions. - This is not always the case.

Further work is needed to make the algorithm robust against cases, where a letter will be mapped onto itself when shifted about some pixels, so that its absolute position cannot be determined. The same situation occurs, when two letters are merely a deferment of each other.

B.t.w. this is the reason why it's so complicated for the human eye to count a long row of equidistant dots.............................................................

Outlook

In the next post we will look at how well Positional and Shannon-Information play together - something along the lines of : "the more differential (i.e. Shannon) information a pixel contains, the less positional information it can contain, and vice versa" - and give an algorithm for unsupervised document-segmentation into letters and alphabet-extraction.

Code

You can either download a Jupyter Notbook here,

or copy the following code...

import matplotlib.pyplot as plt

from math import log, factorial, fmod

import numpy as np

import itertools

import random

import sys

import time

%matplotlib inline

def sigirinc(bux, a, chk, depth, p, per, d, z):

b = []

for x in a:

if d[bux][int(fmod(x+per[depth]-per[0]+len(d[0]), len(d[0])))] == chk:

b.append(x)

if len(b) > 0:

p[per[depth]]=log(len(a)*1./len(b),2)

if depth == len(d[0])-1:

assert (len(b)==1),"more than one element at end of path!!!"

n = b[0]-per[0]

z.append(p[-n:] + p[:-n])

else:

c = list(b)

q = list(p)

sigirinc(bux, b, 0, depth+1, p, per, d, z)

sigirinc(bux, c, 1, depth+1, q, per, d, z)

def checkalph(d, anz):

posi = range(len(d[0]))

zi = []

counter = 0

gesamt = factorial(len(d[0]))

if(factorial(len(d[0]))<anz):

anz = factorial(len(d[0]))

for x in xrange(anz):

i = random.randint(0, gesamt-1)

permi = []

nthPerm(i, list(range(len(d[0]))), permi)

for a in range(len(d)):

p=list(range(len(d[0])))

q=list(range(len(d[0])))

z = []

sigirinc(a, posi, 0, 0, p, permi, d, z)

sigirinc(a, posi, 1, 0, q, permi, d, z)

zi.append(z)

counter += 1

if counter%(anz/100)==0:

sys.stdout.write("%s" % '.')

sys.stdout.flush()

return zi

def printheatmap(a,zzz,x,y):

lll = []

for zz in zzz:

#hat geholf verstnis print("x")

zz_ = list(itertools.chain.from_iterable(zz))

i = np.mean(zz_, axis=0)

lll.append(i)

plt.imshow(np.array(lll).reshape(x,y), cmap=plt.cm.binary, interpolation='none')

plt.colorbar()

plt.show()

def nthPerm(n,elems,z):

if(len(elems) == 1):

z.append(elems[0])

else:

sizeGroup = factorial(len(elems)-1)

q,r = divmod(n,sizeGroup)

v = elems[q]

elems.remove(v)

z.append(v)

nthPerm(r,elems,z)

font_x=7

font_y=9

###63 (7x9)

dd=([0, 1, 1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1],

[1, 1, 1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 0],

[0, 1, 1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 1, 0, 1, 1, 1, 1, 1, 0],

[1, 1, 1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 0],

[1, 1, 1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 0],

[1, 1, 1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0],

[0, 1, 1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 0, 1, 1, 1, 1, 1, 0],

[1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1],

[1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1],

[0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 0, 1, 1, 1, 1, 1, 0],

[1, 0, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0, 1, 0, 0, 1, 0, 0, 1, 1, 0, 0, 1, 1, 1, 1, 0, 0, 0, 1, 0, 0, 1, 1, 0, 0, 1, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0, 0, 1, 1],

[1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 1, 0],

[0, 1, 1, 0, 1, 1, 0, 0, 1, 1, 1, 1, 1, 0, 1, 0, 0, 1, 0, 0, 1, 1, 0, 0, 1, 0, 0, 1, 1, 0, 0, 1, 0, 0, 1, 1, 0, 0, 1, 0, 0, 1, 1, 0, 0, 1, 0, 0, 1, 1, 0, 0, 1, 0, 0, 1, 1, 0, 0, 1, 0, 0, 1],

[0, 1, 1, 0, 0, 0, 1, 1, 1, 1, 0, 0, 0, 1, 1, 0, 1, 1, 0, 0, 1, 1, 0, 1, 1, 0, 0, 1, 1, 0, 0, 1, 0, 0, 1, 1, 0, 0, 1, 1, 0, 1, 1, 0, 0, 1, 1, 0, 1, 1, 0, 0, 0, 1, 1, 1, 1, 0, 0, 0, 1, 1, 0],

[0, 1, 1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 0, 1, 1, 1, 1, 1, 0],

[1, 1, 1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0],

[0, 1, 1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 1, 0, 0, 1, 1, 0, 0, 1, 1, 0, 1, 1, 0, 0, 0, 1, 0, 1, 1, 0, 0, 0, 1, 1, 1, 0, 1, 1, 1, 1, 1, 0],

[1, 1, 1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 0, 1, 1, 1, 0, 0, 0, 0, 1, 0, 1, 1, 0, 0, 0, 1, 0, 0, 0, 1, 1, 0, 1, 0, 0, 0, 0, 1, 1],

[0, 1, 1, 1, 1, 1, 0, 1, 1, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 0, 1, 1, 1, 0, 0, 0, 1, 1, 0, 1, 1, 1, 1, 1, 0],

[0, 1, 1, 1, 1, 1, 0, 1, 1, 0, 1, 0, 1, 1, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0],

[1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 0, 1, 1, 1, 1, 1, 0],

[0, 1, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0, 0, 1, 0, 1, 0, 0, 0, 0, 1, 0, 1, 1, 0, 0, 1, 1, 0, 0, 1, 0, 0, 1, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 1, 0, 0, 1, 0, 0, 0, 1, 1, 1, 1, 0, 0, 0, 0, 1, 1, 0, 0],

[1, 0, 0, 1, 0, 0, 1, 1, 0, 0, 1, 0, 0, 1, 1, 0, 0, 1, 0, 0, 1, 1, 0, 0, 1, 0, 0, 1, 1, 0, 0, 1, 0, 0, 1, 1, 0, 0, 1, 0, 0, 1, 1, 0, 0, 1, 0, 0, 1, 0, 1, 1, 1, 1, 1, 0, 0, 1, 1, 0, 1, 1, 0],

[1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 1, 0, 0, 0, 1, 1, 0, 1, 1, 0, 1, 1, 0, 0, 0, 1, 1, 1, 0, 0, 0, 1, 1, 0, 1, 1, 0, 1, 1, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1],

[1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 0, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 1, 1, 0, 0, 0, 0, 1, 0, 1, 1, 1, 1, 1, 0],

[1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1])

### python 2 , 3 hack

try:

xrange

except NameError:

xrange = range

cycles = 100

t = time.time()

zz = checkalph(dd, cycles)

zzz = []

zzz.append(zz)

#dbg print(zzz)

printheatmap(dd,zzz,font_y,font_x)

print(time.time()-t)